CHECK OUT SYLLABUS

Download Microsoft Azure Developer Syllabus

You can download the FREE syllabus for the Microsoft Azure Developer Associate International Standard Course and decide whether to join our Expert-Led Live Training Program. After the training, you will be prepared to work as an Azure Developer.

This instructor-led training covers two certifications, AZ-900 and AZ-204, to give you in-depth knowledge of Azure.

Why choose SmarTechie?

SmarTechie stands out as a premier live training provider specializing in Microsoft Azure and cloud technologies. Here are some compelling reasons to choose SmarTechie for your training needs:

Expert Trainers

Our trainers are seasoned professionals with extensive experience in Microsoft Azure and cloud technologies. They bring real-world insights and hands-on expertise to the training sessions, ensuring you gain practical knowledge that goes beyond theoretical concepts.

Interactive Live Training

We provide interactive live training sessions that foster an engaging and collaborative learning environment. You can ask questions, participate in discussions, and get immediate feedback from instructors, enhancing your understanding of complex topics.

Comprehensive Curriculum

SmarTechie offers a meticulously designed curriculum that covers all aspects of Microsoft Azure and cloud technologies. Whether you are a beginner or an experienced professional, our courses are structured to meet your learning needs and help you achieve your career goals.

Microsoft Azure Developer Associate Benefits

Higher Salary

With this renowned credential, certified professionals often earn higher salaries than their non-certified peers in the field.

Individual Accomplishments

With this highly regarded certification, professionals can pursue better career opportunities early in their careers.

Gain credibility

Holding the certification makes it easier to earn the trust and respect of other professionals in the same field.

What is Microsoft Azure Developer Associate Certification?

The Microsoft Azure Developer Associate Certification is designed for cloud developers and other professionals who work on cloud platforms. The certification validates expertise in five major Azure domains. Once certified, professionals can take on the role of Azure Developer and help organizations build and maintain applications and services on Azure.

Check out our FREE Blogs on Microsoft Azure

Read and watch free technical articles and videos to upskill yourself.

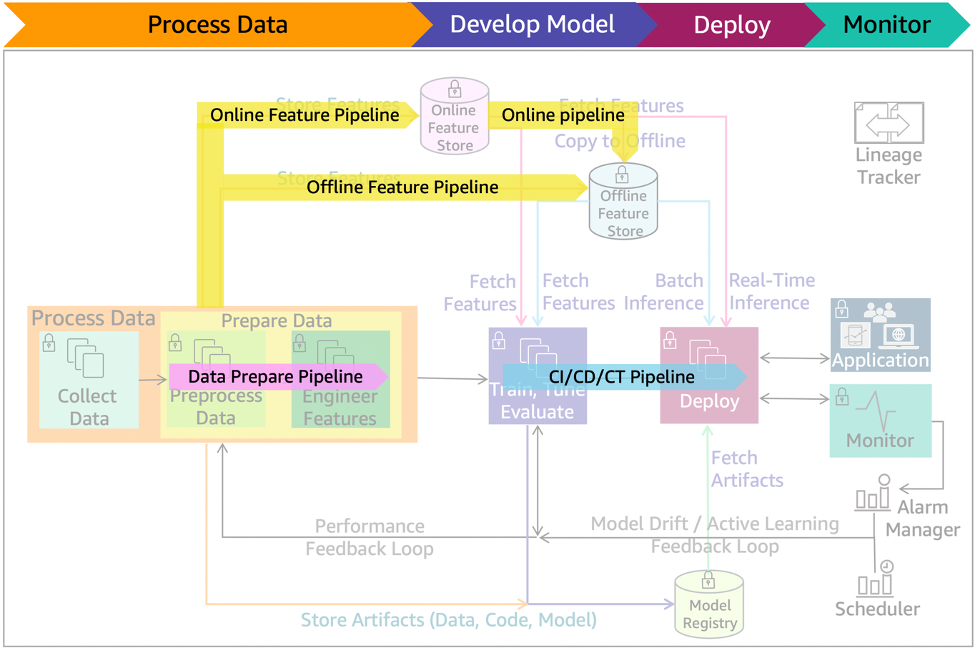

Secrets of Continuous ML Training in Production

Yet Another Perspective on Continuous ML Training in Production

Machine Learning (ML) has revolutionized how we approach problems in industries ranging from healthcare to finance, retail, and beyond. However, getting[…]

Building Azure Resources with a Single Command (I-a-C)

Infrastructure as Code (IaC) is a powerful practice that allows you to manage and provision your infrastructure using code, rather than manual processes. By treating infrastructure as code, you[…]

What Are the Top AI/ML Algorithms Used by Tech Giants?

Artificial Intelligence (AI) and Machine Learning (ML) are no longer just buzzwords. They are the core technologies reshaping how businesses operate, products are developed, and services are delivered. Leading tech[…]

START YOUR CLOUD JOURNEY

Become a cloud expert with our curated live training program.

Join our upcoming batch to upskill yourself in Microsoft Azure cloud technology. The batch details are listed below, and you can book your seat now.

Ongoing Batch

Date: Dec 10, 2024 | 7 AM – 8 AM IST | Mon–Fri | 1 hour daily | 45 days

Upcoming Batch Schedule (Registration Open)

Date: Jan 20, 2025 | 7 AM – 8 AM IST | Mon–Fri | 1 hour daily | 45 days